2025年CCF形式化方法专委会青年学术论坛——大模型辅助的程序分析与验证专题研讨会

杭州市西湖区余杭塘路866号浙江大学紫金港校区海纳苑4幢(理学部大楼)101会议室

此次研讨会聚焦大模型技术与程序分析和验证领域的深度结合,围绕“大模型时代下程序分析与验证的理论与实践”展开前沿探讨。论坛旨在探索大模型技术为形式化方法带来的新范式与新工具,推动程序自动化分析、智能验证与系统安全保障的技术突破。此次研讨会的成功举办将为该领域的研究者和从业者提供一个交流合作的平台,激发创新思维,推动形式化方法与AI结合的技术创新和应用落地,为行业发展提供新的思路和方法。

主办单位:中国计算机学会

承办单位:中国计算机学会形式化方法专委会、浙江大学

组织委员会:

- 吴志林,中国科学院软件研究所

- 沈博,西北工业大学

- 陈明帅,浙江大学

- 王竟亦,浙江大学

- 姚培森,浙江大学

腾讯会议链接:https://meeting.tencent.com/dm/HXC1y2dy00if

直播链接:http://live.bilibili.com/24106724

会议日程

| 报告人 | 报告主题 | 时间 |

|---|---|---|

| 论坛开幕 | 09:00-09:15 | |

| 张业迪(新加坡国立大学) | Towards Trustworthy AI Agents: Integrating Large Language Models with Formal Methods | 09:15-10:00 |

| 茶歇 | 10:00-10:30 | |

| 时清凯(南京大学) | 基于分治的可靠大语言模型应用 | 10:30-11:15 |

| 李屹(华为操作系统部OS内核实验室) | 大模型驱动的泛形式化软件工程实践 | 11:15-12:00 |

| 午休 | 12:00-14:00 | |

| 刘尚清(南京大学) | 基于大语言模型的程序规约理解与生成 | 14:00-14:45 |

| 曹嘉伦(香港科技大学) | From Informal to Formal – Incorporating and Evaluating LLMs on Natural Language Description to Verifiable Formal Proofs | 14:45-15:30 |

| 茶歇 | 15:30-16:00 | |

| 李昊坤(华为2012可信费马实验室) | SMT(NRA)高效求解:结合局部搜索,MCSAT和openCAD的混合方法 | 16:00-16:45 |

| 论坛闭幕 | 16:45-17:00 |

报告摘要: Large Language Models (LLMs) have emerged as a transformative AI paradigm, profoundly influencing broad aspects of daily life. Despite their remarkable performance, LLMs exhibit a fundamental limitation: hallucination—the tendency to produce misleading outputs that appear plausible. This inherent unreliability poses significant risks, particularly in high-stakes domains where trustworthiness is essential. On the other hand, Formal Methods (FMs), which share foundations with symbolic AI, provide mathematically rigorous techniques for modeling, specifying, reasoning, and verifying the correctness of systems. These methods have been widely employed in mission-critical domains such as aerospace, defense, and cybersecurity. However, the broader adoption of FMs remains constrained by significant challenges, including steep learning curves, limited scalability, and difficulties in adapting to the dynamic requirements of daily applications. To build trustworthy AI agents, we argue that the integration of LLMs and FMs is necessary to overcome the limitations of both paradigms. LLMs offer adaptability and human-like reasoning but lack formal guarantees of correctness and reliability. FMs provide rigor but need enhanced accessibility and automation to support broader adoption from LLMs.

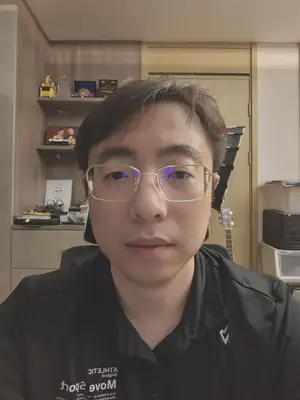

报告人简介: Yedi Zhang obtained her Ph.D. in 2023 from ShanghaiTech Univeristy, where her research focused on the formal verification of neural networks and received the CCF outstanding doctoral dissertation award in formal methods. After that, she joined National University of Singapore, Singapore, as a postdoc to continue on verification and validation of AI systems. Her current research explores the integration of formal methods with large language methods to advance the development of trustworhty AI agents. She serves and has served as a program committee member or reviewer for various conferences/journals (e.g., CAV, FAC, ATVA, TASE, ICSE, TOSEM, APSEC, ICLR, NeurIPS, ACM MM) in the fields of formal methods, software engineering, and artificial intelligence. More infomration at: https://zhangyedi.github.io/.